Issue

I have built a web scraper using bs4 whose purpose is to notify me when a new announcement is posted. At the moment I am testing it with the single keyword 'list' instead of the full set of announcement keywords. For some reason, when I compare the time at which the script detects a new announcement with the time it was actually posted on the website, the two differ by about 5 minutes.

```python
from bs4 import BeautifulSoup
from requests import get
import time
import sys

while True:
    time.sleep(30)

    # Data for the scraping
    url = "https://www.binance.com/en/support/announcement"
    response = get(url)
    html_page = response.content
    soup = BeautifulSoup(html_page, 'html.parser')
    news_list = soup.find_all(class_='css-qinc3w')

    # Create a bag of key words for getting matches
    key_words = ['list', 'token sale', 'open trading', 'opens trading', 'perpetual', 'defi', 'uniswap', 'airdrop', 'adds', 'updates', 'enabled', 'trade', 'support']

    # Matching announcements found in this pass
    updated_list = []

    for news in news_list:
        article_text = news.text
        if "list" in article_text.lower():
            updated_list.append([article_text])
            # A fifth match means a new announcement appeared: report it and stop
            if len(updated_list) > 4:
                print(time.asctime(time.localtime(time.time())))
                print(article_text)
                sys.exit()
```

When the length of the list increased by 1 to 5, the script printed the following time and new announcement: Fri May 28 04:17:39 2021, Binance Will List Livepeer (LPT)

I am unsure why this happens. At first I thought I was being throttled, but after looking at robots.txt again I didn't see any reason why I would be. Moreover, I included a sleep of 30 seconds, which should be more than enough to scrape without any issues. Any help or an alternative solution would be much appreciated.
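One possibility that the thread itself does not confirm is caching: if the HTML at the announcement URL is served from a CDN or server-side cache, every request may return a copy that is several minutes old no matter how often you poll. A minimal sketch for checking this, assuming the response carries the standard HTTP `Age`, `Date` and `Cache-Control` headers (they may be absent); `cache_staleness` is a hypothetical helper name.

```python
from email.utils import parsedate_to_datetime


def cache_staleness(headers):
    """Summarise the caching-related headers of an HTTP response.

    Assumption: a cache in front of the site sets the standard `Age`,
    `Date` and `Cache-Control` headers. Missing values come back as None.
    """
    # requests uses a case-insensitive dict, a plain dict does not,
    # so normalise the header names first.
    h = {k.lower(): v for k, v in headers.items()}
    # `Age` is the number of seconds the response has sat in a cache.
    age = int(h["age"]) if h.get("age", "").isdigit() else None
    # `Date` is when the origin server generated the response.
    date = parsedate_to_datetime(h["date"]) if "date" in h else None
    return {
        "age_seconds": age,
        "server_date": date,
        "cache_control": h.get("cache-control"),
    }
```

With `requests`, you would call `cache_staleness(response.headers)` right after `get(url)`. An `Age` of a few hundred seconds, or a `Date` that lags your local clock, would point to a cached page rather than throttling.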

My question is:

Why is it 5 minutes behind? Why does it not notify me as soon as the website posts the announcement? The program takes about 5 minutes longer to detect a new post than the posting time shown on the website.
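Separately from the delay, the detection rule in the question (exit once more than four matches are on the page) depends on a count, which may stay the same if the page always shows a fixed number of items. A sketch of an alternative, assuming announcement titles are distinct enough to act as keys: remember the titles already seen and report only unseen matches. `matches` and `new_announcements` are hypothetical helper names; the keyword list is the one from the question.

```python
KEY_WORDS = ['list', 'token sale', 'open trading', 'opens trading', 'perpetual',
             'defi', 'uniswap', 'airdrop', 'adds', 'updates', 'enabled',
             'trade', 'support']


def matches(title, key_words=KEY_WORDS):
    """True if any keyword occurs in the title, ignoring case.

    This is a plain substring test, so 'list' also matches 'Delist'.
    """
    lowered = title.lower()
    return any(word in lowered for word in key_words)


def new_announcements(titles, seen, key_words=KEY_WORDS):
    """Return matching titles not yet in `seen`, adding them to `seen`.

    `seen` is a set kept between polls. On the first poll you would
    normally call this once only to fill it, without notifying.
    """
    fresh = []
    for title in titles:
        if title not in seen and matches(title, key_words):
            seen.add(title)
            fresh.append(title)
    return fresh
```

Inside the polling loop you would pass the titles scraped on each pass (for example `[news.text for news in news_list]`) and notify for whatever `new_announcements` returns.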

Solution

```python
from xrzz import http  # give it a try: my simple scratch module

import json

url = "https://www.binance.com/bapi/composite/v1/public/cms/article/list/query?type=1&pageNo=1&pageSize=30"

# Request the announcement list from the JSON endpoint instead of the HTML page
req = http("GET", url=url, tls=True).body().decode()

key_words = ['list', 'token sale', 'open trading', 'opens trading', 'perpetual', 'defi', 'uniswap', 'airdrop', 'adds', 'updates', 'enabled', 'trade', 'support']

for i in json.loads(req)['data']['catalogs']:
    for o in i['articles']:
        # Compare case-insensitively so titles such as "Binance Will List ..." match
        if key_words[0] in o['title'].lower():
            print(o['title'])
```
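If you would rather not install the answerer's `xrzz` module, the same request can probably be made with only the standard library. A minimal sketch, assuming the endpoint returns plain JSON to an unauthenticated GET (in practice it may require extra headers) and that the response shape `data` → `catalogs` → `articles` → `title` is as used in the answer; it is not a documented, stable API. `fetch_payload` and `matching_titles` are hypothetical names. The title check lowercases each title, because a case-sensitive `'list' in title` would miss titles such as "Binance Will List Livepeer (LPT)".

```python
import json
import urllib.request

# Endpoint taken from the answer; its stability is an assumption.
URL = ("https://www.binance.com/bapi/composite/v1/public/cms/article/list/query"
       "?type=1&pageNo=1&pageSize=30")


def fetch_payload(url=URL, timeout=10):
    """Download the endpoint and parse the body as JSON (network call)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def matching_titles(payload, key_words):
    """Yield article titles that contain any keyword, ignoring case."""
    for catalog in payload["data"]["catalogs"]:
        for article in catalog["articles"]:
            title = article["title"]
            if any(word in title.lower() for word in key_words):
                yield title
```

Usage: `for title in matching_titles(fetch_payload(), ['list']): print(title)`. Keeping the download and the filtering in separate functions lets you test the filtering on a saved payload without hitting the site.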

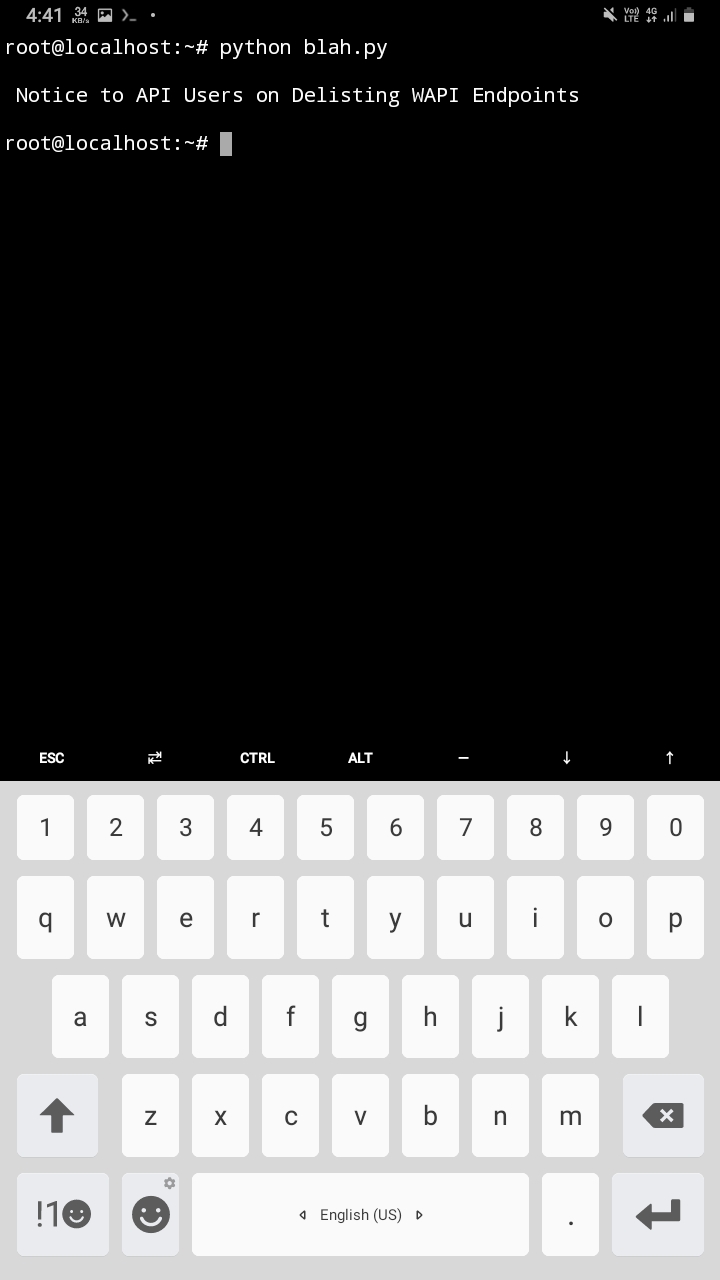

Ouput:

Answered By - dedshit
